Summary

- Today’s news indicates the NHTSA probe into autopilot performance has widened.

- This widening has a chance to catch Tesla’s “big lie” regarding autopilot safety.

- This article explains the big lie and why it might be caught. This might have demand implications.

“So far, so good”

We’ve all heard about the fatal Tesla (NASDAQ:TSLA) autopilot crash, which I examined in great depth in my article “A Detailed View Into The Tesla Autopilot Fatality.”

We also all know that NHTSA launched a probe into it. Today, however, we got additional information on NHTSA’s investigation that may prove relevant. I will explain why.

Tesla’s Defense Of Autopilot

Tesla’s defense of its autopilot feature rests on it repeating a big lie often enough. As the saying goes:

If you tell a lie big enough and keep repeating it, people will eventually come to believe it.

So what is this lie I speak of? Let me quote straight from Tesla’s blog (emphasis is mine):

We learned yesterday evening that NHTSA is opening a preliminary evaluation into the performance of Autopilot during a recent fatal crash that occurred in a Model S. This is the first known fatality in just over 130 million miles where Autopilot was activated. Among all vehicles in the US, there is a fatality every 94 million miles. Worldwide, there is a fatality approximately every 60 million miles. It is important to emphasize that the NHTSA action is simply a preliminary evaluation to determine whether the system worked according to expectations.

Why Is This A Lie?

It’s a lie because:

- When the death occurred, autopilot had been used for less than 100 million miles, not 130 million miles.

- Autopilot means lane keeping and/or traffic-aware cruise control (TACC). The issue arises only when lane keeping and TACC are used together, and that combination had certainly racked up only a fraction of the less than 100 million miles on autopilot.

- Autopilot lane keeping is only used on safer-than-average roads and in safer-than-average conditions, so it makes no sense to compare it to accidents occurring on all roads and under all conditions.

- Autopilot-capable cars are much newer than the average car, thus much safer than the average car, autopilot or not.

- The Model S is a large 4-door sedan, thus much safer than the average car, autopilot or not.

- Tesla’s driver demographics are safer than average on account of age and income/intelligence, autopilot or not. (The exception here is gender, though.)

- Teslas are sold in states and countries which themselves are safer than average (have fewer fatalities per mile than average), autopilot or not.

- The average fatality rates include motorcyclists and pedestrians, who account for roughly one-third of fatalities in the US and more elsewhere in the world. That makes the averages not directly comparable to the Tesla on autopilot. Indeed, the average stripped of this effect would all by itself render the Model S on autopilot less safe than average.

Take all of these effects together, and it’s obvious that a Tesla on autopilot is much less safe than the average comparable car driven by a comparable driver on comparable roads. Quite possibly it’s more than an order of magnitude more dangerous, though quantifying it is a job for a PhD thesis.
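The arithmetic behind the motorcyclist-and-pedestrian point above can be sketched in a few lines. This is only a rough illustration: it takes the 94 million and 130 million mile figures from Tesla’s own statement, and it assumes the motorcyclist and pedestrian share is exactly one-third (the text only says roughly one-third). The variable and function names are mine, chosen for illustration.

```python
# Figures quoted in Tesla's blog post.
US_MILES_PER_FATALITY = 94e6        # one fatality every 94 million miles (all US vehicles)
AUTOPILOT_MILES_CLAIMED = 130e6     # miles Tesla claims before the first autopilot fatality

# Assumption: motorcyclists and pedestrians are exactly 1/3 of US road
# fatalities (the article says "roughly one-third").
EXCLUDED_SHARE = 1 / 3


def miles_per_fatality_excluding(miles_per_fatality: float, excluded_share: float) -> float:
    """Miles per fatality once a share of fatalities is removed from the count.

    Removing a share s of fatalities leaves (1 - s) of them over the same
    miles, so the distance between fatalities grows by a factor 1 / (1 - s).
    """
    return miles_per_fatality / (1.0 - excluded_share)


adjusted_us = miles_per_fatality_excluding(US_MILES_PER_FATALITY, EXCLUDED_SHARE)

print(f"US average, all fatalities:            {US_MILES_PER_FATALITY / 1e6:.0f}M miles")
print(f"US average, excl. motorcycles/walkers: {adjusted_us / 1e6:.0f}M miles")
print(f"Tesla's claimed autopilot figure:      {AUTOPILOT_MILES_CLAIMED / 1e6:.0f}M miles")
print("Autopilot worse than adjusted average: ", AUTOPILOT_MILES_CLAIMED < adjusted_us)
```

Under that one-third assumption, the adjusted US average works out to about 141 million miles per fatality, which is already above Tesla’s 130 million mile figure before any of the other adjustments listed above are applied.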

However, There’s More To It

Up until now, I haven’t said why the new NHTSA information, carried in a letter delivered by NHTSA to Tesla, is relevant to this matter.

It’s relevant, again, for a couple of reasons:

- It speaks of a June 14 meeting with Tesla. Yet Tesla only saw fit to release this information to the market on June 30. This is of minor importance.

- NHTSA is asking for information not just on this particular fatal autopilot accident, but also on other accidents where autopilot might have been involved. This is of major importance.

This widening of the investigation can make a lot of difference regarding Tesla’s claims of autopilot safety (the big lie). That is because Tesla, as always, has seemingly taken liberties with what constitutes an autopilot-related accident.

Take, for instance, the accident in which Arianna Simpson crashed into the back of another car while on autopilot. Simpson realized, too late, that the car was not going to slow down before hitting the car ahead. So she braked, but by that point the accident was already unavoidable. So what does Tesla say about this (according to ArsTechnica.com)?

In contrast, Tesla says that the vehicle logs show that its adaptive cruise control system is not to blame. Data points to Simpson hitting the brake pedal and deactivating autopilot and traffic aware cruise control, returning the car to manual control instantly.

It isn’t a great stretch, then, to believe that internally Tesla won’t be classifying this as an “autopilot-related” accident. To use a metaphor: if Tesla’s autopilot were throwing you off a tall building, by this criterion it would still be perfectly safe and would not be causing any “autopilot-related” accidents, just as long as it disconnected moments before you hit the ground.

Obviously, accident data collected and classified according to such a criterion would be mind-bogglingly misleading. It is here that NHTSA’s widening of its autopilot probe can gain a lot of relevance. If Tesla doesn’t pull the wool over NHTSA’s eyes, NHTSA can get data on actual autopilot safety (to which it would still have to apply numerous statistical adjustments). How could Tesla still pull the wool over NHTSA’s eyes? By providing data only for accidents where autopilot was still engaged at the moment of impact. Let’s hope NHTSA is more intelligent than that.

Conclusion

NHTSA’s probe into autopilot performance has widened. Initially, it was seen as just covering the autopilot fatality, whereas now NHTSA is trying to get a better grip on autopilot performance in general.

This widened NHTSA probe might well expose Tesla’s recurring untruth about the safety of its cars while on autopilot. This isn’t really a Tesla-only issue, in the sense that other automakers with similar features will have similar problems, but Tesla is the only one actively trying to mislead the market regarding the feature’s safety. Furthermore, Tesla makes autopilot more of a selling point than other automakers do. Indeed, this whole episode is probably already having an actual impact on demand for Tesla cars, demand which, judging from Tesla’s Model S sales during Q2 2016, Tesla can hardly spare.

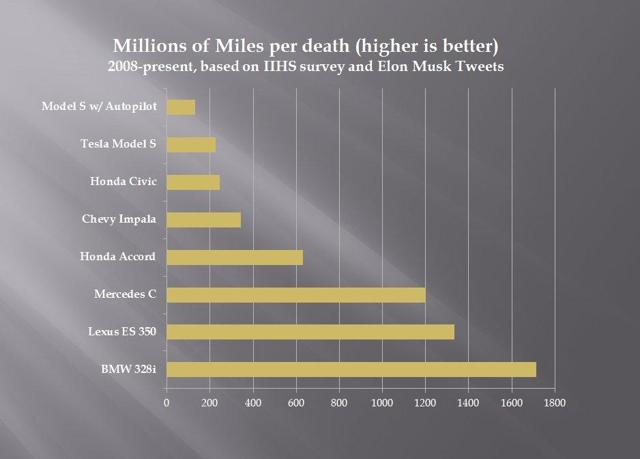

As an illustration on how Tesla is misleading regarding the safety of autopilot, I can’t resist reproducing the following slide:

Source: Eric’s feed on Twitter, based on IIHS data

This slide, too, is misleading in several ways (including not being comprehensive), but it still illustrates the point quite well.

Author: Paulo Santos
